Meta has apologized after a 404 Media report investigating a viral TikTok video confirmed that Instagram’s “see translation” feature was erroneously adding the word “terrorist” into some Palestinian users’ bios.

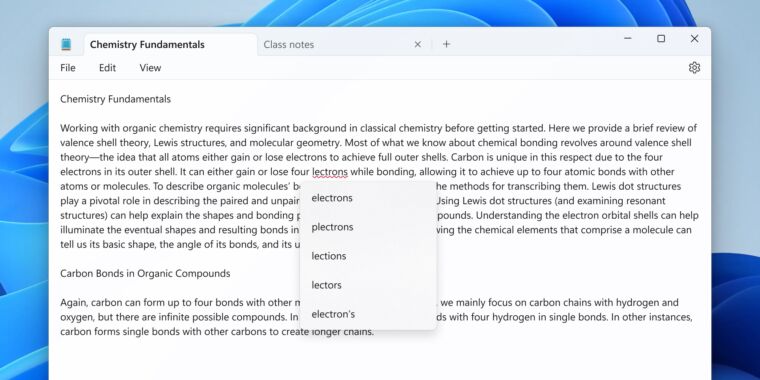

Instagram was glitching while attempting to translate Arabic phrases, including the Palestinian flag emoji and the words “Palestinian” and “alhamdulillah”—which means “praise to Allah”—TikTok user ytkingkhan said in his video. Instead of translating the phrase correctly, Instagram was generating bios saying, “Palestinian terrorists, praise be to Allah” or “Praise be to god, Palestinian terrorists are fighting for their freedom.”

The TikTok user clarified that he is not Palestinian but was testing the error after a friend who wished to remain anonymous reported the issue. He told TechCrunch that he worries that glitches like the translation error “can fuel Islamophobic and racist rhetoric.” It’s unclear how many users were affected by the error. In statements, Meta has only claimed that the problem was “brief.”

“Last week, we fixed a problem that briefly caused inappropriate Arabic translations in some of our products,” Meta’s spokesperson told Ars. “We sincerely apologize that this happened.”

Not everyone has accepted Meta’s apology. Rasha Abdul-Rahim, director of Amnesty Tech (a branch of Amnesty International that advocates for tech companies to put human rights first), said on X that Meta apologizing is “not good enough.”

This is not good enough @instagram. The fact bios that incl “Palestinian”, 🇵🇸, and “alhamdulillah” were translated as “Palestinian terrorist” is not a bug – it’s a feature of your systems. Just as “Al Aqsa mosque” was designated a “terrorist” org in 2021. https://t.co/M5mAUNmP6v

— Rasha Abdul-Rahim 🇵🇸 (@Rasha_Abdul) October 20, 2023

Abdul-Rahim said that translating “Palestinian,” the Palestinian flag emoji, and “alhamdulillah” as “Palestinian terrorist” is “not a bug,” but “a feature” of Meta’s systems. Abdul-Rahim told Ars that the translation error is part of a larger systemic issue with Meta platforms, which have allegedly censored and misclassified Palestinian content for years.

Amnesty International started monitoring these issues in 2021, when the organization began receiving reports of content and account takedowns on Facebook and Instagram after Israeli police attacked Palestinian protesters in Sheikh Jarrah, a predominantly Palestinian neighborhood in East Jerusalem. Amnesty International and several Palestinian civil society organizations soon found that hundreds of pro-Palestinian accounts were being blocked from livestreaming, having views of their posts restricted, or having posts removed entirely. Some users’ accounts were being flagged for “content simply sharing information about events in Sheikh Jarrah,” or recommending things like “people to follow and information resources,” Abdul-Rahim told Ars, while other content was “getting labeled as ‘sensitive’ and potentially upsetting.”

After that 2021 controversy, Meta commissioned a report by a third party that “concluded that the company’s actions had an ‘adverse human rights impact’ on Palestinian users’ right to freedom of expression and political participation,” TechCrunch reported.

Abdul-Rahim told Ars that it’s clear that Meta did not take enough steps to adequately fix the issues reported then, which seem to mirror issues still being reported today.

“Now fast-forward to the current context of the Israel-Gaza conflict, we are seeing the same issues,” Abdul-Rahim told Ars. The New York Times recently reported claims from thousands of Palestinian supporters, saying that “their posts have been suppressed or removed from Facebook and Instagram, even if the messages do not break the platforms’ rules.” Agreeing with Abdul-Rahim, experts told TechCrunch that the problem wasn’t a bug but a “moderation bias problem,” labeling Meta the “most restrictive” platform that has historically been guilty of “systemic censorship of Palestinian voices.”

Meta denied censoring Palestinian supporters

In a blog post, Meta denied that the company was restricting pro-Palestinian content. The company (which, like most platforms, has banned content supporting Hamas, a globally recognized terrorist organization) said that its platforms have recently “introduced a series of measures to address the spike in harmful and potentially harmful content spreading on our platforms,” but promised that its platforms “apply these policies equally around the world” and insisted that “there is no truth to the suggestion that we are deliberately suppressing voices.”

The blog post reported two bugs that Meta said have now been fixed. One stopped some accounts’ posts and Reels that were shared as Stories from “showing up properly,” which led “to significantly reduced reach” of posts. Another bug “prevented people from going Live on Facebook for a short time.”

The blog post discussed other reasons pro-Palestinian content might have reduced reach on platforms, including Meta’s reliance on technology “to avoid recommending potentially violating and borderline content across Facebook, Instagram, and Threads.” Meta noted that any content that “clearly identifies hostages” is also being removed, “even if” it’s being shared “to condemn or raise awareness” of the hostage situation. Platforms are also blocking hashtags when they are overrun by violative content, which could reduce the reach of non-violative content attempting to use the same hashtags. It’s also possible that many users have used controls to limit how much sensitive content they see.