Aurich Lawson

Bribery. Embezzlement. Terrorism.

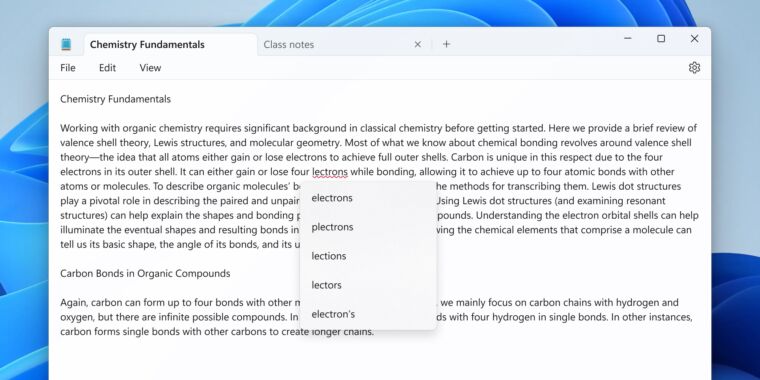

What if an AI chatbot accused you of doing something terrible? When bots make mistakes, the false claims can ruin lives, and the legal questions around these issues remain murky.

That’s according to several people suing the biggest AI companies. But chatbot makers hope to avoid liability, and a string of legal threats has revealed how easy it might be for companies to wriggle out of responsibility for allegedly defamatory chatbot responses.

Earlier this year, an Australian regional mayor, Brian Hood, made headlines by becoming the first person to accuse ChatGPT’s maker, OpenAI, of defamation. Few seemed to notice when Hood resolved his would-be landmark AI defamation case out of court this spring, but the quiet conclusion to this much-covered legal threat offered a glimpse of what could become a go-to strategy for AI companies seeking to avoid defamation lawsuits.

It was mid-March when Hood first discovered that OpenAI’s ChatGPT was spitting out false responses to user prompts, wrongly claiming that Hood had gone to prison for bribery. Hood was alarmed. He had built his political career as a whistleblower exposing corporate misconduct, but ChatGPT had seemingly garbled the facts, fingering Hood as a criminal. He worried that the longer ChatGPT was allowed to repeat these false claims, the more likely it was that the chatbot could ruin his reputation with voters.

Hood asked his lawyer to give OpenAI an ultimatum: Remove the confabulations from ChatGPT within 28 days or face a lawsuit that could become the first to prove that ChatGPT’s mistakes—often called “hallucinations” in the AI field—are capable of causing significant harms.

We now know that OpenAI chose the first option. By the end of April, the company had filtered the false statements about Hood from ChatGPT. Hood’s lawyers told Ars that Hood was satisfied, dropping his legal challenge and considering the matter settled.

AI companies watching this case play out might think they can get by doing as OpenAI did. Rather than building perfect chatbots that never defame users, they could simply warn users that content may be inaccurate, wait for content takedown requests, and then filter out any false information—ideally before any lawsuits are filed.

The only problem with that strategy is the time that elapses between a person first discovering defamatory statements and the moment when tech companies filter out the damaging information, if the companies take action at all.

For Hood, it was a month, but for others, the timeline has stretched on much longer. That has allegedly put some users in uncomfortable situations that grew so career-threatening that they’re now demanding thousands or even millions of dollars in damages from two of today’s AI giants.

OpenAI and Microsoft both sued for defamation

In July, Maryland-based Jeffery Battle sued Microsoft, alleging that he lost millions and that his reputation was ruined after he discovered that Bing search and Bing Chat were falsely labeling him a convicted terrorist. That same month, Georgia-based Armed American Radio host Mark Walters sued OpenAI, claiming that ChatGPT falsely stated that he had been charged with embezzlement.

Unlike Hood, Walters made no attempt to get ChatGPT’s allegedly libelous claims about him removed before filing his lawsuit. In Walters’ view, libel laws don’t require ChatGPT users to take any extra steps to inform OpenAI about defamatory content before filing claims. Walters is hoping the court will agree that if OpenAI knows its product is generating responses that defame people, it should be held liable for publishing defamatory statements.