Artist interpretation of the creatures talking about your mom on Xbox Live last night.

Aurich Lawson / Thinkstock

Anyone who’s worked in community moderation knows that finding and removing bad content becomes exponentially tougher as a communications platform reaches into the millions of daily users. To help with that problem, Microsoft says it’s turning to AI tools to help “accelerate” its Xbox moderation efforts, letting these systems automatically flag content for human review without needing a player report.

Microsoft’s latest Xbox transparency report, the company’s third public look at enforcement of its community standards, is the first to include a section on “advancing content moderation and platform safety with AI.” And that report specifically calls out two tools that the company says “enable us to achieve greater scale, elevate the capabilities of our human moderators, and reduce exposure to sensitive content.”

Microsoft says many of its Xbox safety systems are now powered by Community Sift, a moderation tool created by Microsoft subsidiary TwoHat. Among the “billions of human interactions” the Community Sift system has filtered this year are “over 36 million” Xbox player reports in 22 languages, according to the Microsoft report. The Community Sift system evaluates those player reports to see which ones need further attention from a human moderator.

That new filtering system hasn’t had an apparent effect on the total number of “reactive” enforcement actions (i.e., those in response to a player report) Microsoft has undertaken in recent months, though. The 2.47 million such enforcement actions taken in the first half of 2023 were down slightly from the 2.53 million enforcement actions in the first half of 2022. But that enforcement number now represents a larger proportion of the total number of player reports, which shrank from 33.08 million in early 2022 to 27.31 million in early 2023 (both numbers are way down from 52.05 million player reports issued in the first half of 2021).
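To make the "larger proportion" point concrete, here is a rough back-of-the-envelope sketch using only the figures quoted above. It treats reactive enforcement actions divided by player reports as a loose proxy. That is an assumption for illustration, since a single report does not necessarily map to a single action. The names `REPORTS`, `REACTIVE_ACTIONS`, and `enforcement_share` are hypothetical.

```python
# Figures quoted from Microsoft's transparency reports, in millions.
REPORTS = {"H1 2022": 33.08, "H1 2023": 27.31}
REACTIVE_ACTIONS = {"H1 2022": 2.53, "H1 2023": 2.47}


def enforcement_share(actions: float, reports: float) -> float:
    """Hypothetical proxy: reactive enforcement actions as a percentage of player reports."""
    return actions / reports * 100


for period in REPORTS:
    share = enforcement_share(REACTIVE_ACTIONS[period], REPORTS[period])
    print(f"{period}: {share:.1f}% of reports matched by an enforcement action")
```

By this crude measure, the ratio rises from about 7.6 percent to about 9.0 percent, even though the raw number of actions dipped.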

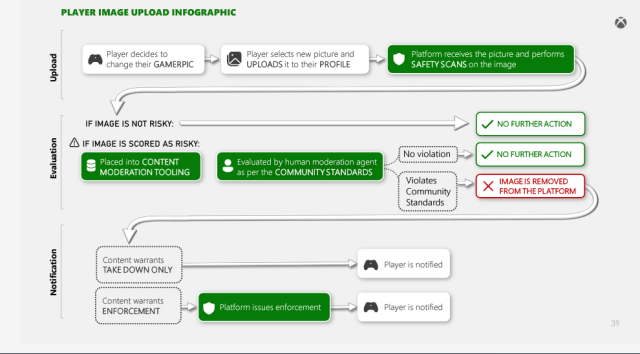

The shrinking number of player reports may be partly due to an increase in “proactive” enforcement, which Microsoft undertakes before any player has had a chance to report a problem. To aid in this process, Microsoft says it’s using the Turing Bletchley v3 AI model, an updated version of a tool Microsoft first launched in 2021.

This “vision-language” model automatically scans all “user-generated imagery” on the Xbox platform, including custom Gamerpics and other profile imagery, Microsoft says. The Bletchley system then uses “its world knowledge to understand the many nuances for what images are acceptable based on the Community Standards on the Xbox platform,” passing any suspect content to a queue for human moderation.

Microsoft says the Bletchley system contributed to the blocking of 4.7 million images in the first half of 2023, a 39 percent increase from the previous six months that Microsoft attributes to its AI investment.

Growth in “inauthentic” accounts

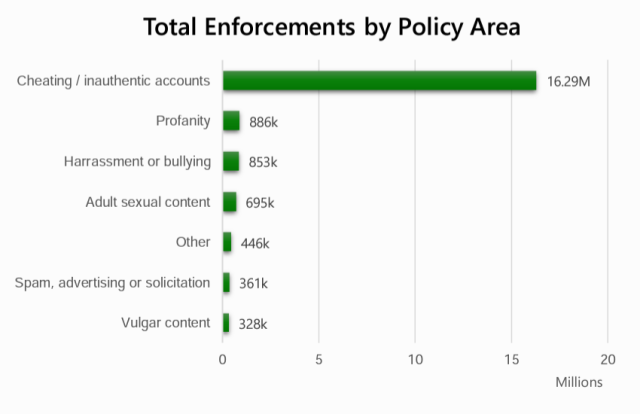

Such semi-automated image takedowns are dwarfed, however, by the 16.3 million enforcement actions Microsoft says are “centered around detecting accounts that have been tampered with or are being used in inauthentic ways.” This includes accounts used by cheaters, spammers, friend/follower account inflaters, and other accounts that “ultimately create an unlevel playing field for our players or detract from their experiences.”

Actions against these “inauthentic” accounts have exploded since last year, up 276 percent from the 4.33 million that were taken down in the first half of 2022. The vast majority of these accounts (99.5 percent) are taken down before a player has a chance to report them, and “often… before they can add harmful content to the platform,” Microsoft says.

Elsewhere in the report, Microsoft says it continues to see the impact of its 2022 decision to amend its definition of “vulgar content” on the Xbox platform to “include offensive gestures, sexualized content, and crude humor.” That definition helped lead to 328,000 enforcement actions against “vulgar” content in the first half of 2023, a 236 percent increase from the roughly 98,000 vulgar content takedowns six months prior (which was itself a 450 percent increase from the six months before that). Despite this, vulgar content enforcement still ranks well behind plain old profanity (886,000 enforcement actions), harassment or bullying (853,000), “adult sexual content” (695,000), and spam (361,000) in the list of Xbox violation types.
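The growth rates cited for inauthentic accounts and vulgar content can be sanity-checked with a simple percentage-change calculation. This is a minimal sketch that uses only the rounded figures quoted in this article; the helper name `percent_change` is hypothetical.

```python
def percent_change(before: float, after: float) -> float:
    """Percentage change from `before` to `after`."""
    return (after - before) / before * 100


# Inauthentic-account actions: 4.33 million (H1 2022) -> 16.3 million (H1 2023).
inauthentic = percent_change(4.33e6, 16.3e6)

# Vulgar-content actions: roughly 98,000 -> 328,000 over consecutive six-month periods.
vulgar = percent_change(98_000, 328_000)

print(f"inauthentic accounts: +{inauthentic:.0f}%")
print(f"vulgar content: +{vulgar:.0f}%")
```

The inauthentic-account figure works out to about 276 percent, matching the report. The vulgar-content figure comes out near 235 percent because the "roughly 98,000" base is rounded, which is consistent with Microsoft's reported 236 percent.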

Microsoft’s report also includes bad news for players hoping to get a ban or suspension overturned: only about 4.1 percent of more than 280,000 such case reviews ended in reinstatement in the first six months of 2023. That’s down slightly from the 6 percent of 151,000 appeals that were successful in the first half of 2022.

Since the period covered in this latest transparency report, Microsoft has rolled out a new standardized, eight-strike system laying out a sliding scale of penalties for different types and frequencies of infractions. It’ll be interesting to see if the next planned transparency report shows any change in player or enforcement behavior with those new rules in effect.